In the current AI zeitgeist, sequence models have skyrocketed in popularity for their ability to analyze data and predict what to do next. For instance, you’ve likely used next-token prediction models like ChatGPT, which anticipate each word (token) in a sequence to form answers to users’ queries. There are also full-sequence diffusion models like Sora, which convert words into dazzling, realistic visuals by successively “denoising” an entire video sequence.

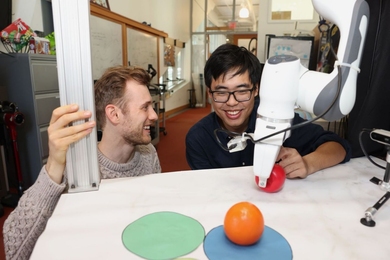

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed a simple change to the diffusion training scheme that makes this sequence denoising considerably more flexible.

When applied to fields like computer vision and robotics, next-token and full-sequence diffusion models have capability trade-offs. Next-token models can spit out sequences that vary in length. However, they generate each token without awareness of desirable states in the far future (for example, they cannot steer generation toward a goal 10 tokens away), and thus require additional mechanisms for long-horizon (long-term) planning. Diffusion models can perform such future-conditioned sampling, but lack the ability of next-token models to generate variable-length sequences.

To combine the strengths of both models, the CSAIL researchers created a sequence model training technique called “Diffusion Forcing.” The name comes from “Teacher Forcing,” the conventional training scheme that breaks down full sequence generation into the smaller, easier steps of next-token generation (much like a good teacher simplifying a complex concept).
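To make the Teacher Forcing idea concrete, here is a minimal Python sketch of how one full sequence can be broken into next-token training examples. It illustrates only the conventional scheme the name refers to, not Diffusion Forcing itself; the function name `teacher_forcing_pairs` and the sample sentence are hypothetical and chosen purely for illustration.

```python
def teacher_forcing_pairs(tokens):
    """Split one sequence into (context, next_token) training examples.

    Hypothetical helper for illustration: each example pairs the
    ground-truth prefix with the single token that follows it.
    """
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


# Made-up example sequence (an assumption, not taken from the article).
sequence = ["the", "robot", "picks", "up", "the", "cup"]
pairs = teacher_forcing_pairs(sequence)

for context, target in pairs:
    print(context, "->", target)
```

Each pair asks the model to predict one token from the true preceding tokens, so a six-token sequence becomes five small prediction problems instead of one large one.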
